Brighteye

In today’s rapidly evolving AI landscape, organizations face significant challenges when integrating Large Language Models (LLMs) into their workflows. Security concerns, cost management, and compliance requirements often create barriers to adoption.

Brighteye is an open-source solution designed to address these challenges by providing a secure, observable proxy layer between your applications and LLM providers like OpenAI, Anthropic, and others.

The Problem Brighteye Solves

When implementing LLMs in enterprise environments, teams often encounter several critical issues:

- Security & Access Control: How to manage API keys and access permissions across teams?

- Cost Management: How to track and control token usage to prevent unexpected costs?

- Prompt Safety: How to ensure prompts don’t contain sensitive data or violate policies?

- Observability: How to monitor usage patterns, performance, and errors?

- Multi-Provider Support: How to switch between different LLM providers without code changes?

Brighteye provides a unified solution to these challenges, offering a lightweight proxy that sits between your applications and LLM providers.

Key Features

🔐 Enterprise-Grade Security

Brighteye implements robust security features specifically designed for AI applications:

- API Key Management: Create and manage API keys with granular permissions

- Access Control: Define which models and providers each team can access

- Usage Quotas: Set token limits per user, team, or application

- Rate Limiting: Prevent abuse with customizable rate limits
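
To make the rate-limiting idea concrete, here is a minimal sliding-window sketch in Python. It is not Brighteye's implementation: the class name, the 60-second window, and the injectable clock are all assumptions made for illustration, and the source does not say which unit a limit is expressed in.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per `window_seconds` for each API key.

    Hypothetical helper: the window length is an assumption, not a
    documented Brighteye setting.
    """

    def __init__(self, limit, window_seconds=60.0, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # api_key -> timestamps of accepted requests

    def allow(self, api_key):
        now = self.clock()
        hits = self._hits[api_key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject without recording the attempt
        hits.append(now)
        return True
```

Each key gets its own window, so one noisy team cannot exhaust another team's allowance.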

🧹 Prompt Safety & Compliance

Ensure that your prompts meet organizational guidelines and compliance requirements:

- Content Filtering: Block prompts containing sensitive data patterns

- Regular Expression Rules: Define custom patterns for prompt filtering

- PII Detection: Identify and block personally identifiable information

- Audit Logging: Keep detailed records of all prompt transactions

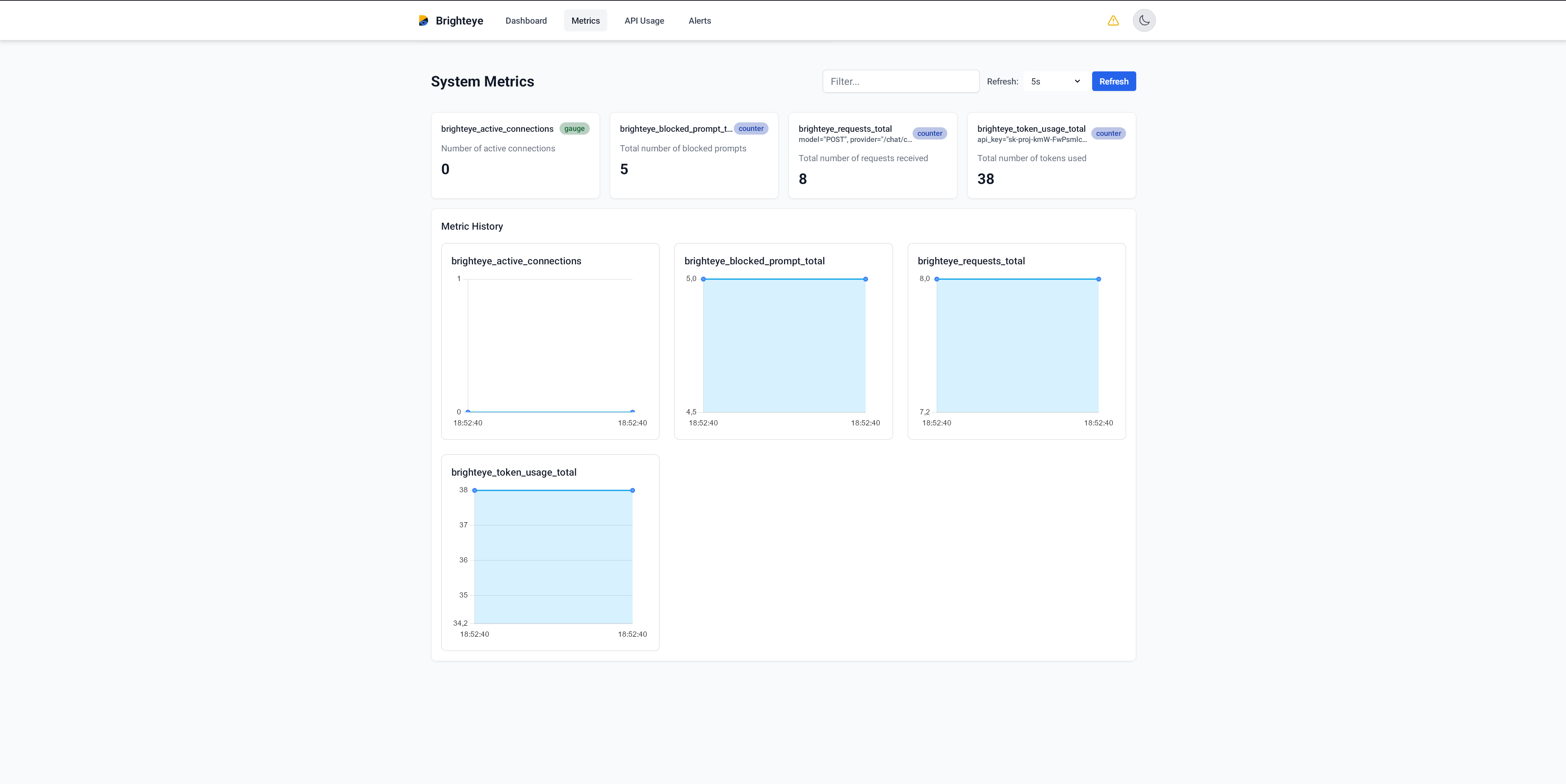

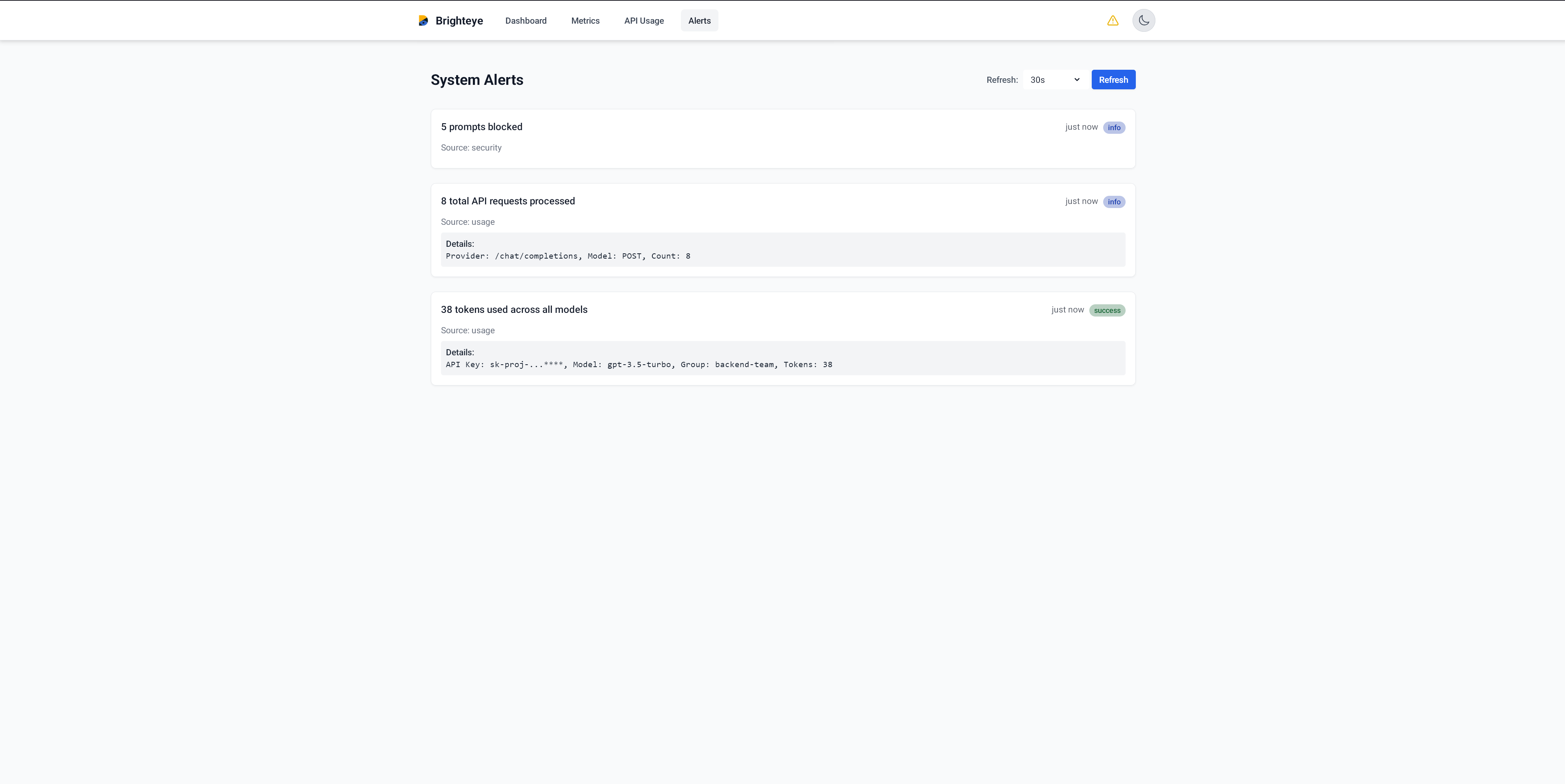
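
The regular-expression filtering described above can be sketched in a few lines of Python. The `BlockRule` type, the `find_violation` function, the two example patterns, and the case-insensitive matching are assumptions chosen for illustration; they are not Brighteye's built-in rules.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockRule:
    pattern: str      # regular expression to search for in the prompt
    description: str  # human-readable reason recorded when the rule fires


def find_violation(prompt, rules):
    """Return the first rule whose pattern matches the prompt, or None."""
    for rule in rules:
        if re.search(rule.pattern, prompt, flags=re.IGNORECASE):
            return rule
    return None


# Illustrative rules only; real deployments need patterns tuned to their data.
RULES = [
    BlockRule(r"\b\d{3}-\d{2}-\d{4}\b", "US SSN-like number"),
    BlockRule(r"\b(?:\d[ -]?){13,16}\b", "card-like digit sequence"),
]

if __name__ == "__main__":
    hit = find_violation("My SSN is 123-45-6789", RULES)
    print(hit.description if hit else "prompt accepted")
```

Pattern matching of this kind catches well-formatted identifiers but will miss paraphrased or oddly formatted data, so it works best as one layer among several.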

📊 Comprehensive Monitoring

Gain visibility into your LLM usage with built-in monitoring:

- Prometheus Integration: Track key metrics with Prometheus

- Beautiful Dashboard: Real-time visualization of system status and metrics

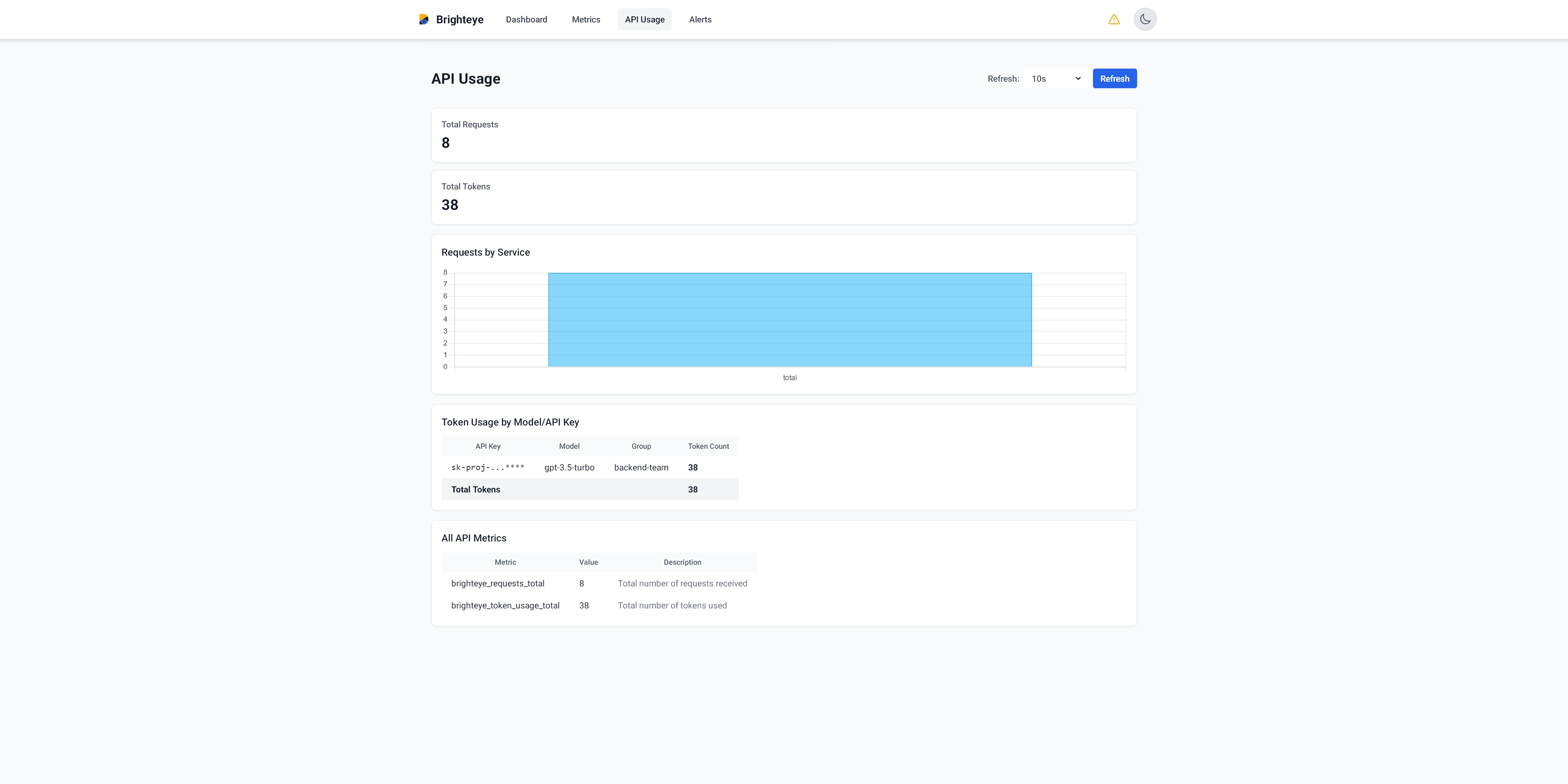

- Token Usage Tracking: Monitor costs across models and teams

- Error Monitoring: Quickly identify and troubleshoot issues
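
As a rough illustration of token tracking feeding Prometheus, the sketch below keeps per-team, per-model counters and renders them in the Prometheus text exposition format. The metric name `brighteye_tokens_total` and the label names are hypothetical; the source does not list the metrics Brighteye actually exports.

```python
from collections import Counter


class TokenUsage:
    """Accumulate token counts per (group, model) pair."""

    def __init__(self):
        self._tokens = Counter()

    def record(self, group, model, tokens):
        self._tokens[(group, model)] += tokens

    def render(self, metric="brighteye_tokens_total"):
        """Render the counters as Prometheus text exposition lines.

        Note: label values are written as-is; a production exporter must
        escape backslashes, double quotes, and newlines in them.
        """
        lines = [f"# TYPE {metric} counter"]
        for (group, model), total in sorted(self._tokens.items()):
            lines.append(f'{metric}{{group="{group}",model="{model}"}} {total}')
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    usage = TokenUsage()
    usage.record("dev-team", "gpt-3.5-turbo", 120)
    usage.record("dev-team", "gpt-3.5-turbo", 30)
    print(usage.render(), end="")
```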

🔄 Provider Agnostic

Brighteye works with multiple LLM providers out of the box:

- OpenAI: GPT-3.5, GPT-4, and other models

- Anthropic: Claude models

- Extensible Architecture: Easily add support for additional providers
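
One way to picture an extensible, provider-agnostic design is a small registry that maps a provider name to its upstream endpoint. The sketch below is an assumption about how such a registry could look, not Brighteye's code: `Provider`, `PROVIDERS`, and `resolve` are hypothetical names, and only the public OpenAI and Anthropic base URLs are real.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    base_url: str   # root of the provider's HTTP API
    chat_path: str  # path of the provider's chat endpoint

    def chat_url(self):
        return self.base_url.rstrip("/") + self.chat_path


# Adding a provider means adding one entry here.
PROVIDERS = {
    "openai": Provider("https://api.openai.com/v1", "/chat/completions"),
    "anthropic": Provider("https://api.anthropic.com/v1", "/messages"),
}


def resolve(provider_name):
    """Look up a provider, failing loudly for names that are not registered."""
    try:
        return PROVIDERS[provider_name]
    except KeyError:
        raise ValueError(f"unsupported provider: {provider_name!r}") from None
```

A real proxy also has to translate request and response bodies between provider formats, which this sketch leaves out.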

How It Works

Brighteye acts as a proxy layer between your applications and LLM providers:

- Your application sends requests to Brighteye instead of directly to the LLM provider

- Brighteye validates the request against security policies and quotas

- If the request is approved, Brighteye forwards it to the specified LLM provider

- The provider’s response is returned to your application

- All transactions are logged and metrics are collected
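
The flow above can be sketched as a single function that runs policy checks in order, forwards the request only if every check passes, and logs the outcome either way. Everything here is hypothetical scaffolding (`Decision`, `handle`, the shape of the request dictionary, and the log record); it only mirrors the order of the steps and does not show Brighteye's internals.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    allowed: bool
    reason: str = "ok"


def handle(request, checks, forward, audit_log):
    """Validate, conditionally forward, and always log one request.

    `checks` is a list of callables that return None to accept the request
    or a string explaining why it is rejected. `forward` stands in for the
    call to the upstream LLM provider.
    """
    decision = Decision(True)
    for check in checks:
        reason = check(request)
        if reason is not None:
            decision = Decision(False, reason)
            break  # stop at the first failed policy
    response = forward(request) if decision.allowed else None
    audit_log.append({
        "api_key": request["api_key"],
        "model": request["model"],
        "allowed": decision.allowed,
        "reason": decision.reason,
    })
    return decision, response


if __name__ == "__main__":
    allowed_models = {"gpt-3.5-turbo"}
    checks = [lambda r: None if r["model"] in allowed_models else "model not allowed"]
    log = []
    print(handle({"api_key": "frontend-team-key", "model": "gpt-4"},
                 checks, lambda r: {"status": "forwarded"}, log))
```

Keeping the checks as plain callables means a new policy (a quota, a rate limit, a prompt filter) can be added without touching the forwarding code.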

This architecture provides several benefits:

- Centralized Control: Manage all LLM access through a single point

- Consistent Policies: Apply the same security rules across all applications

- Easy Integration: No major code changes required in your applications

- Scalability: Horizontal scaling to handle high volumes of requests

Getting Started

Installation

    # Pull the API container from GitHub Container Registry
    docker pull ghcr.io/mehmetymw/brighteye:latest

    # Pull the UI container from GitHub Container Registry
    docker pull ghcr.io/mehmetymw/brighteye-ui:latest

    # Run the API container
    docker run -d \
      --name brighteye \
      -p 1881:1881 \
      -v "$(pwd)/config.yaml:/app/config.yaml" \
      ghcr.io/mehmetymw/brighteye:latest

    # Run the UI container
    docker run -d \
      --name brighteye-ui \
      -p 3000:3000 \
      -e METRICS_URL=http://localhost:1883/metrics \
      ghcr.io/mehmetymw/brighteye-ui:latest

Basic Configuration

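Edit the config.yaml file mounted into the API container to set your provider credentials, the API keys Brighteye issues to your teams, and your prompt-filtering policies: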

    providers:
      openai:
        api_key: "your-openai-api-key"
      anthropic:
        api_key: "your-anthropic-api-key"

    api_keys:
      - key: "frontend-team-key"
        name: "Frontend Team"
        group: "dev-team"
        rate_limit: 100
        daily_token_quota: 100000
        allowed_models: ["gpt-3.5-turbo", "claude-instant-1"]

    blocked_patterns:
      - pattern: "credit card number|ssn|social security"
        description: "PII detection"
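
To show what a setting like `daily_token_quota` implies at request time, here is a minimal Python sketch of a per-key quota that resets when the date changes. The `DailyTokenQuota` class and its reset-at-midnight behavior are assumptions for illustration; the source does not describe how Brighteye counts or resets quotas.

```python
from datetime import date


class DailyTokenQuota:
    """Track tokens consumed today against a fixed daily limit."""

    def __init__(self, daily_limit, today=date.today):
        self.daily_limit = daily_limit
        self.today = today  # injectable so day rollover can be tested
        self._day = today()
        self._used = 0

    def try_consume(self, tokens):
        """Reserve `tokens` if they fit in today's budget; return success."""
        current = self.today()
        if current != self._day:
            # A new day has started: reset the counter.
            self._day, self._used = current, 0
        if self._used + tokens > self.daily_limit:
            return False
        self._used += tokens
        return True


if __name__ == "__main__":
    quota = DailyTokenQuota(daily_limit=100000)
    print(quota.try_consume(60000), quota.try_consume(50000))
```

In the example, the second call is refused because it would push the day's total past the 100000-token limit taken from the configuration above.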

Making Your First Request

Once configured, you can make requests through Brighteye:

    curl -X POST "http://localhost:1881/chat/completions?provider=openai" \
      -H "Authorization: Bearer frontend-team-key" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-3.5-turbo",
        "messages": [
          {"role": "user", "content": "What is the capital of France?"}
        ]
      }'

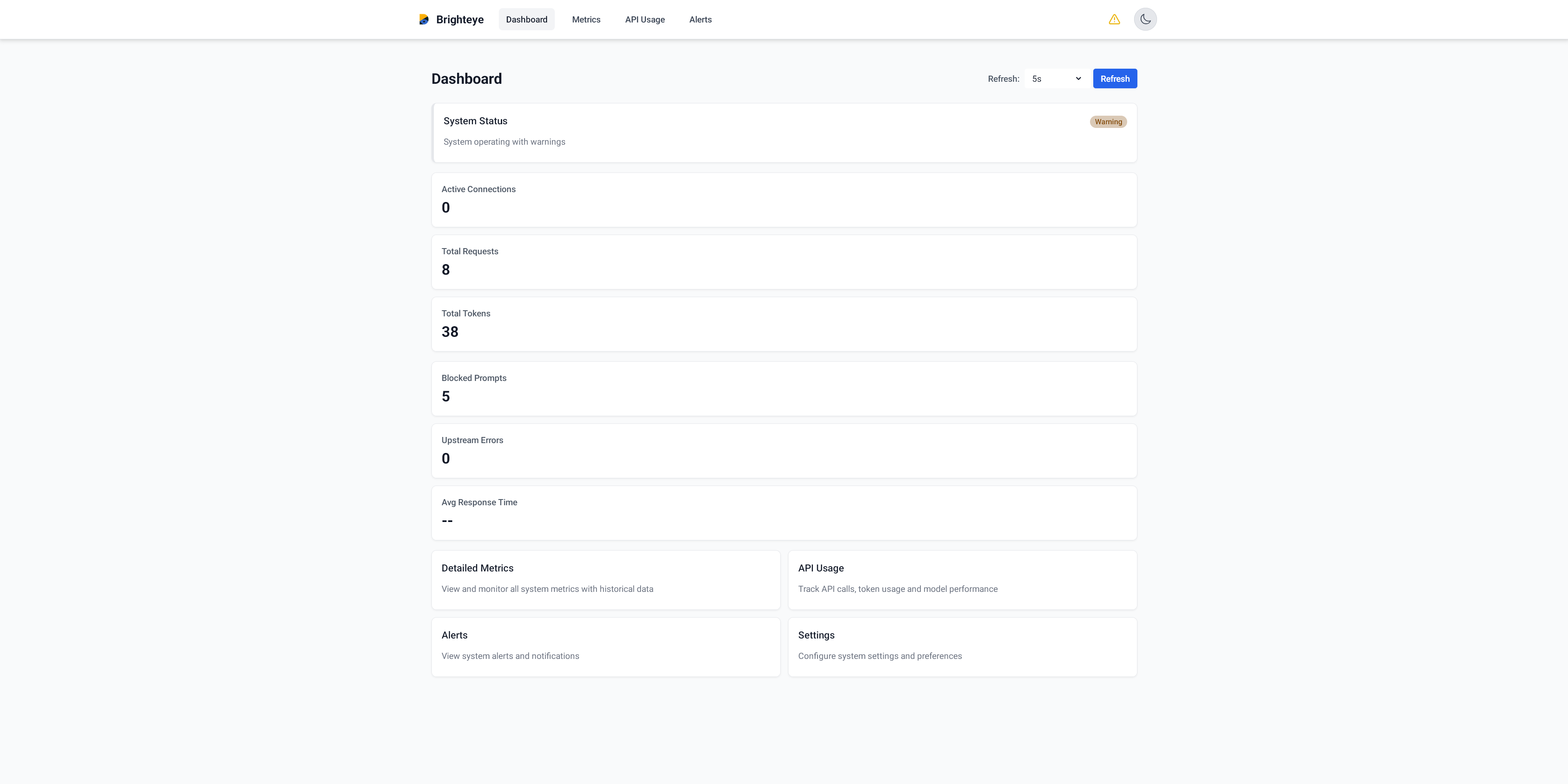
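
The same request can be made from application code. The sketch below uses only Python's standard library and reuses the URL, headers, and body from the curl command; the function names `build_chat_request` and `send` are hypothetical, and the assumption that the response body is JSON is mine, since the source does not document the response schema.

```python
import json
import urllib.request


def build_chat_request(prompt, api_key, model="gpt-3.5-turbo", provider="openai",
                       base_url="http://localhost:1881"):
    """Build (but do not send) a chat request addressed to the Brighteye proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions?provider={provider}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and decode the body, assuming the proxy returns JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("What is the capital of France?", "frontend-team-key")
    print(send(req))
```

Because the application only knows the proxy's address and its own Brighteye key, the provider credentials never leave the proxy.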

The Dashboard

Brighteye includes a modern dashboard built with React that provides real-time insights into your LLM usage:

- System Status: Overall health and status of the proxy

- Token Usage: Charts showing token consumption by model and team

- Request Volume: Track request patterns over time

- Errors & Alerts: Quickly identify issues in your LLM integration

The dashboard is available at http://localhost:3000 once the UI container is running.

Use Cases

Enterprise AI Governance

For enterprises implementing AI across multiple teams, Brighteye provides the governance layer needed to ensure security and compliance while enabling innovation.

Controlling AI Costs

By setting token quotas and monitoring usage, organizations can prevent unexpected costs from unconstrained LLM usage.

Multi-Provider Strategy

Organizations looking to leverage multiple LLM providers can use Brighteye to abstract away provider-specific details and switch between providers as needed.

Developer Self-Service

Enable development teams to use LLMs safely without requiring direct access to provider API keys.

The Road Ahead

Brighteye is an active open-source project with ongoing development planned for:

- Additional LLM provider integrations

- Enhanced security features

- Advanced prompt filtering capabilities

- More detailed analytics and reporting

- Role-based access control

Contributing

Brighteye is open source, and contributions are welcome! Whether it’s adding new features, improving documentation, or reporting bugs, your help is appreciated.

Visit our GitHub repository to get started.

Conclusion

As LLMs become increasingly critical to business operations, the need for secure, manageable integration grows. Brighteye provides the missing layer between your applications and LLM providers, enabling safe, cost-effective AI adoption.

Try Brighteye today and take control of your LLM infrastructure!